
A couple of notes about Z

Tuesday, April 21, 2009 | Permalink

It is often said that Z is non-linear, whereas W is linear. This gives a W-buffer a uniformly distributed resolution across the view frustum, whereas a Z-buffer has better precision close up and poor precision in the distance. Given that objects don't normally get thicker just because they are farther away, a W-buffer generally has fewer artifacts than a Z-buffer with the same number of bits. In the past some hardware supported W-buffers, but these days they are considered deprecated and hardware doesn't implement them anymore. Why, aren't they better? Not really. Here's why:

While W is linear in view space it's not linear in screen space. Z, which is non-linear in view space, is on the other hand linear in screen space. This fact can be observed by a simple shader in DX10:

float dx = ddx(In.position.z);

float dy = ddy(In.position.z);

return 1000.0 * float4(abs(dx), abs(dy), 0, 0);

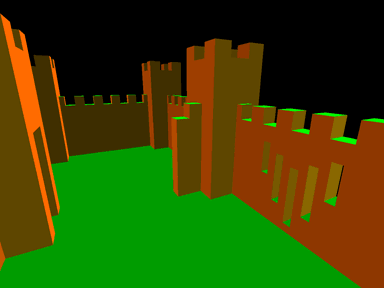

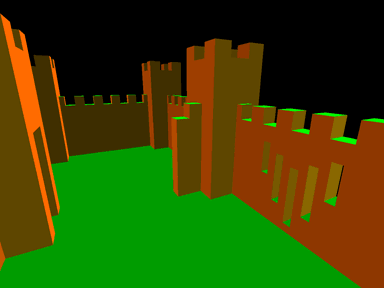

Here In.position is SV_Position. The result looks something like this:

Note how all surfaces appear single colored. The difference in Z pixel-to-pixel is the same across any given primitive. This matters a lot to hardware. One reason is that interpolating Z is cheaper than interpolating W. Z does not have to be perspective corrected. With cheaper units in hardware you can reject a larger number of pixels per cycle with the same transistor budget. This of course matters a lot for pre-Z passes and shadow maps. With modern hardware linearity in screen space also turned out to be a very useful property for Z optimizations. Given that the gradient is constant across the primitive it's also relatively easy to compute the exact depth range within a tile for Hi-Z culling. It also means techniques such as Z-compression are possible. With a constant Z delta in X and Y you don't need to store a lot of information to be able to fully recover all Z values in a tile, provided that the primitive covered the entire tile.
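The constant gradient can also be checked numerically outside a shader. The following is a minimal Python sketch, not the article's HLSL. It assumes a D3D-style projection where depth is `FAR * (z - NEAR) / ((FAR - NEAR) * z)`, and it uses a made-up view-space plane, tile size and pixel step. All function and variable names are illustrative. It shows that one reference depth plus two per-pixel deltas recovers every depth in a fully covered tile, that the tile's depth range sits at its corners, and that view-space z (what a W-buffer would store) does not have constant deltas.

```python
NEAR, FAR = 0.1, 100.0   # assumed clip planes
SIZE, STEP = 8, 0.01     # assumed tile size and per-pixel screen step

def depth_from_view_z(z):
    """D3D-style post-projection depth (z/w) in [0, 1] for view-space z."""
    return FAR * (z - NEAR) / ((FAR - NEAR) * z)

def plane_view_z(sx, sy, plane=(0.3, -0.2, 1.0, 5.0)):
    """View-space z where the ray through screen point (sx, sy) hits the
    hypothetical plane a*X + b*Y + c*Z = d, with X = sx*Z and Y = sy*Z."""
    a, b, c, d = plane
    return d / (a * sx + b * sy + c)

# Depth at every pixel of a tile that the plane covers completely.
tile = [[depth_from_view_z(plane_view_z(x * STEP, y * STEP))
         for x in range(SIZE)] for y in range(SIZE)]

# Per-pixel deltas, measured once at the tile origin.
dzdx = tile[0][1] - tile[0][0]
dzdy = tile[1][0] - tile[0][0]

# One reference depth plus the two deltas rebuilds the whole tile.
rebuilt = [[tile[0][0] + x * dzdx + y * dzdy for x in range(SIZE)]
           for y in range(SIZE)]
max_error = max(abs(tile[y][x] - rebuilt[y][x])
                for y in range(SIZE) for x in range(SIZE))

# A planar function over a tile has its extremes at the corners, so a
# depth range for Hi-Z only needs four evaluations.
last = SIZE - 1
corners = [tile[0][0], tile[0][last], tile[last][0], tile[last][last]]
all_values = [v for row in tile for v in row]

# View-space z along one row: the pixel-to-pixel delta drifts.
w_row = [plane_view_z(x * STEP, 0.0) for x in range(SIZE)]
w_delta_spread = abs((w_row[last] - w_row[last - 1]) - (w_row[1] - w_row[0]))

print("max reconstruction error:", max_error)
print("view-space z delta spread:", w_delta_spread)
```

Real hardware compression schemes are more involved than this. The sketch only illustrates why a constant delta in X and Y makes such schemes feasible.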

These days the depth buffer is increasingly being used for other purposes than just hidden surface removal. Being linear in screen space turns out to be a very desirable property for post-processing. Assume for instance that you want to do edge detection on the depth buffer, perhaps for antialiasing by blurring edges. This is easily done by comparing a pixel's depth with its neighbors' depths. With Z values you have constant pixel-to-pixel deltas, except for across edges of course. This is easy to detect by comparing the delta to the left and to the right, and if they don't match (with some epsilon) you crossed an edge. And then of course the same with up-down and diagonally as well. This way you can also reject pixels that don't belong to the same surface if you implement say a blur filter but don't want to blur across edges, for instance for smoothing out artifacts in screen space effects, such as SSAO with relatively sparse sampling.
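As a rough illustration of the left/right delta comparison, here is a small Python sketch that works on a single scanline of depth values. The function name, the epsilon and the sample depths are all made up for the example. A real implementation would run in a pixel shader and also test the vertical and diagonal neighbors.

```python
def find_depth_edges(row, epsilon=1e-6):
    """Return indices of pixels whose depth delta to the left neighbor
    differs from the delta to the right neighbor by more than epsilon."""
    edges = []
    for i in range(1, len(row) - 1):
        left_delta = row[i] - row[i - 1]
        right_delta = row[i + 1] - row[i]
        if abs(left_delta - right_delta) > epsilon:
            edges.append(i)
    return edges

# Hypothetical scanline: two planar surfaces, each with its own constant
# per-pixel depth delta, meeting between pixel 4 and pixel 5.
surface_a = [0.90 + 0.001 * i for i in range(5)]
surface_b = [0.50 + 0.002 * i for i in range(5)]
scanline = surface_a + surface_b

edges = find_depth_edges(scanline)
print(edges)  # the pixels on either side of the discontinuity
```

Pixels inside either surface see matching deltas and are left alone. Only the two pixels adjacent to the discontinuity are flagged, and those are the ones a blur filter would refuse to mix.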

What about the precision in view space when doing hidden surface removal then, which is still the main use of a depth buffer? You can regain most of the lost precision compared to W-buffering by switching to a floating point depth buffer. This way you get two types of non-linearities that to a large extent cancel each other out: that from Z and that from the floating point representation. For this to work you have to flip the depth buffer so that the far plane is 0.0 and the near plane 1.0, which is something that's recommended even if you're using a fixed point buffer since it also improves the precision of the math during transformation. You also have to switch the depth test from LESS to GREATER. If you're relying on a library function to compute your projection matrix, for instance D3DXMatrixPerspectiveFovLH(), the easiest way to accomplish this is to just swap the near and far parameters.
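The effect of flipping a floating point depth buffer can be estimated with a few lines of Python. This is only a sketch under stated assumptions: the clip planes and sample distances are arbitrary, the function names are illustrative, and depth is computed in double precision and then rounded to 32-bit float, so it models only the storage format and not the arithmetic a GPU performs during transformation.

```python
import struct

NEAR, FAR = 0.1, 1000.0  # assumed clip planes

def to_float32(x):
    """Round a Python float to the nearest 32-bit float."""
    return struct.unpack("f", struct.pack("f", x))[0]

def standard_depth(z):
    """Near plane maps to 0.0, far plane to 1.0."""
    return FAR * (z - NEAR) / ((FAR - NEAR) * z)

def reversed_depth(z):
    """Near plane maps to 1.0, far plane to 0.0 (near and far swapped)."""
    return NEAR * (FAR - z) / ((FAR - NEAR) * z)

def distinct_float32_depths(depth_fn, z_values):
    """Count how many depth values stay distinguishable in float32."""
    return len({to_float32(depth_fn(z)) for z in z_values})

# 1000 view-space distances, 0.01 units apart, far from the camera.
distant = [900.0 + 0.01 * i for i in range(1000)]

standard_count = distinct_float32_depths(standard_depth, distant)
reversed_count = distinct_float32_depths(reversed_depth, distant)

print("distinct depths, standard:", standard_count)
print("distinct depths, reversed:", reversed_count)
```

With the standard mapping the distant samples crowd just below 1.0, where 32-bit floats are comparatively coarse, so many of them collapse to the same stored value. With the reversed mapping they land near 0.0, where floats are densest, and remain distinguishable.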

Z ya!

Marc Olano

Wednesday, April 22, 2009

Worth reading "Minimum triangle separation for correct z-buffer occlusion" by Kurt Akeley and Jonathan Su from Graphics Hardware 2006. Somewhat surprisingly, image coordinate precision in rasterization can have a bigger impact on depth buffering errors than z-buffer precision.

Pat Wilson

Sunday, June 28, 2009

I have nothing useful to add except: Thank you for this explanation; it is the best I have ever seen. Now I also understand the hi-z errors I have been confused about...

Thatcher Ulrich

Thursday, June 3, 2010

Hm, I think this article is mostly misinformation. The in.position.z in your shader is NOT what is written to the depth buffer, and therefore I think the screenshot is misleading. The hardware normally computes in.position.z/in.position.w and writes that to the depth buffer. Both z and w are linear in camera space, but z/w is definitely not linear in camera space and it's definitely not linear in screen space either.

I'd be curious to see the screenshot if you try computing in.position.z/in.position.w.

I agree about reversing the depth when using floating-point depth, but not when using fixed-point depth.

Here's a pretty good article which covers these issues and AFAIK is accurate: http://www.gamasutra.com/blogs/BranoKemen/20091231/3972/Floating_Point_Depth_Buffers.php

Summary: the ideal thing to use for depth is actually a function of log(camera_z) but that can be approximated using the reversed floating point z buffer.

deadc0de

Wednesday, August 18, 2010

Thatcher: I'm not 100% sure but I think the catch is that he's using SV_POSITION, and not a position copy à la DX9. I don't have a DX10 PC at home (well... I have a MacBook) to confirm this and the SDK documentation is not clear, but googling seems to confirm that SV_POSITION is in viewport space.

I still agree that there is a lot of misinformation, i.e. on the way the hi-z works and on the benefits of using derivatives for post processing...

Humus

Friday, September 17, 2010

Thatcher Ulrich,

the information is correct. You are assuming In.Position.z contains the interpolated z value, but it contains the interpolated z/w value, i.e. the same value that is written to the depth buffer. Likewise, .w does not contain w, but 1.0 / w. In DX9 .zw of VPOS was not accessible. When I compare "Z" and "W" in this article I mean the things referred to in the names "Z-Buffer" and "W-Buffer", rather than the interpolated z and w values.

As for reversing the depth buffer with fixed-point, did you try it? I did. It improved things noticeably.

Thatcher Ulrich

Thursday, February 3, 2011

Sorry, you're right, I was applying OpenGL definitions to your DX10 description. My mistake.

I agree that running the floating-point depth is the good, practical thing to do.

Re inverting the fixed point depth buffer -- I haven't tried it. You say it "improves the precision on the math during transformation" so I'll take your word for it, although it sounds odd to me. But, it doesn't improve the relative precision in the depth buffer, right?

Thatcher Ulrich

Thursday, February 3, 2011

I meant to say: I agree that running the floating-point depth BACKWARDS is the good, practical thing to do.

Zhugel_007

Monday, March 14, 2011

Really nice article. It answers lots of questions I had in my head. One more question though.

I understand what you've explained in your article, but what if I force the Z to be linear? Play with the projection matrix by dividing by fFar:

mProj._33/=fFar;

mProj._43/=fFar;

and multiply W in vertex shader:

float4 vPos = mul(Input.Pos,worldViewProj);

vPos.z = vPos.z * vPos.w;

Output.Pos = vPos;

Would there be any visual difference compared with using the hyperbolic Z in the final rendering result? I did this test, and visually, it looks the same as using the hyperbolic Z. Also, would this break the Hi-Z? (It looks OK in PIX though.)
