Geometric Post-process Anti-Aliasing

Saturday, March 12, 2011

I have a new demo up demonstrating a post-process antialiasing technique. Antialiasing algorithms generally come with four-letter acronyms, such as MSAA, SSAA, CSAA, MLAA and SRAA, so in that tradition I think GPAA (Geometric Post-process Anti-Aliasing) would be a decent name for this one. It works by using the actual geometric information in the scene to figure out how to blend pixels with their neighbors.
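The post doesn't show the shader, so what follows is only a minimal sketch of one way a per-pixel blend weight could be derived from an edge's screen-space position. It is not the demo's actual code. The sketch assumes several things: edges are already projected to pixel coordinates, with y growing downward and pixel centers at +0.5. The image is grayscale and stored as a list of rows. The helper names `gpaa_coverage` and `gpaa_resolve` are hypothetical. The idea is to measure how far the edge passes from the pixel center along the edge's minor axis. The sliver of the pixel on the far side is treated as belonging to the neighboring surface, and the pixel is blended toward the neighbor across the edge by that area.

```python
def gpaa_coverage(px, py, edge):
    """Return (dx, dy, weight): which neighbor to blend with, and how much.

    Hypothetical helper. Pixel (px, py) has its center at (px + 0.5, py + 0.5),
    y grows downward, and edge = (x0, y0, x1, y1) is in pixel units.
    """
    x0, y0, x1, y1 = edge
    cx, cy = px + 0.5, py + 0.5
    ex, ey = x1 - x0, y1 - y0
    if ex == 0 and ey == 0:
        return 0, 0, 0.0  # degenerate edge: nothing to blend
    if abs(ex) >= abs(ey):
        # Mostly horizontal edge: signed vertical distance from center to the line.
        diff = y0 + (cx - x0) * ey / ex - cy
        dx, dy = 0, (1 if diff > 0 else -1)
    else:
        # Mostly vertical edge: signed horizontal distance from center to the line.
        diff = x0 + (cy - y0) * ex / ey - cx
        dx, dy = (1 if diff > 0 else -1), 0
    # The part of the pixel beyond the edge belongs to the neighboring surface.
    # Its area is 0.5 - |diff| when the edge crosses the pixel, otherwise zero.
    return dx, dy, max(0.0, 0.5 - abs(diff))


def gpaa_resolve(image, px, py, edge):
    """Blend pixel (px, py) toward its neighbor across the edge (hypothetical)."""
    dx, dy, w = gpaa_coverage(px, py, edge)
    if w == 0.0:
        return image[py][px]
    # Clamp the neighbor to the image bounds (an assumption for border pixels).
    nx = min(max(px + dx, 0), len(image[0]) - 1)
    ny = min(max(py + dy, 0), len(image) - 1)
    return (1.0 - w) * image[py][px] + w * image[ny][nx]
```

Here is an example with a horizontal edge at y = 1.25, where the image is white (1.0) above the edge and black (0.0) below it. The edge covers the top quarter of pixel (0, 1), so that pixel takes a quarter of its white neighbor above, giving 0.25. Pixels the edge doesn't cross are left unchanged.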

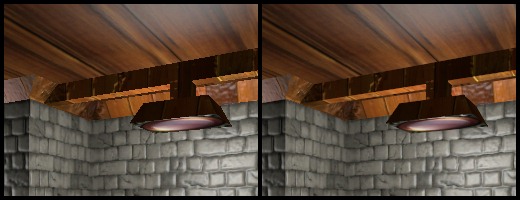

Here's a comparison before and after applying GPAA:

Tom

Sunday, March 13, 2011

Looks great! Got a higher res image to look at?

Are you using a depth-buffer for this effect?

TomF

Sunday, March 13, 2011

Interesting! The choice of where to AA is similar to "Discontinuity Edge Overdraw" by Sander et al: http://research.microsoft.com/en-us/um/people/hoppe/ But the way you deal with it is very different.

I'm sure I remember a paper somewhere that generated a screen-space buffer of the specific places where the "smudging" needed to be done, rather than doing each line separately. So much like the "future work" you suggested of a single render of the scene, rendering pixels to one buffer, edges to another, then the smudging pass is a single pass over the whole screen. Of course I can't remember where I saw it now - my brain is old.

Why do you say you're "copying" the first pass to a texture? It's not AA, so you should just be able to render directly to the texture, no?

Ben

Sunday, March 13, 2011

It looks really really good! I'm curious to see scenes with more geometric detail.

Humus

Sunday, March 13, 2011

Tom: The depth-buffer is not used other than removing lines that are hidden. No particular information is extracted from it during antialiasing, but the deferred rendering part of the demo does of course read it.

TomF: I was unaware of that paper, but the idea is indeed quite similar. The difference is I blend as a post-step over a final image whereas AFAICT he's rendering antialiased lines and doing the shading of both sides of the edge.

WheretIB

Sunday, March 13, 2011

Since the depth-buffer is used to remove lines that are hidden, I figure that it doesn't work with transparent surfaces?

Humus

Sunday, March 13, 2011

TomF: I forgot to answer the copying question. The pass needs the backbuffer as an input while also rendering to it. If the hardware could read from and write to the same surface in the same draw call, no copy would be needed. In DX10 it's explicitly disallowed, but I guess it might work on some hardware in DX9, and I suppose it might work on consoles.

WheretIB: Well, antialiasing edges of transparent surfaces should work fine I think (haven't tested though). However, background edges behind a transparent surface would smear pixels on the transparent surface. This may not be so visible though. But in any case it should be possible to separate the rendering into two passes, one for opaque followed by its GPAA pass, then transparent surfaces on top of that and then GPAA of that.
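The copying question above can be illustrated with a tiny CPU-side sketch of the hazard. This is an assumed illustration, not the demo's code. Each hypothetical `blend_pass` job lerps a pixel toward a neighbor. When it reads from a snapshot, every pixel sees the original image. When it reads from the buffer it is writing, a pixel can pick up a neighbor that was already blended. The sequential loop only mimics one possible ordering. On a GPU the result of such a read/write overlap is simply undefined, which is presumably why DX10 forbids binding the same surface as both input and render target.

```python
def blend_pass(dst, src, jobs):
    """For each (i, n, w) job, set dst[i] = lerp(src[i], src[n], w)."""
    for i, n, w in jobs:
        dst[i] = (1.0 - w) * src[i] + w * src[n]
    return dst


row = [0.0, 1.0]                   # two pixels straddling an edge
jobs = [(0, 1, 0.5), (1, 0, 0.5)]  # each blends halfway toward the other

# Reading from a separate copy: both pixels see the original values.
from_copy = blend_pass(row[:], row[:], jobs)

# Reading and writing the same buffer: pixel 1 sees pixel 0's new value.
same = row[:]
in_place = blend_pass(same, same, jobs)
```

With the copy, both pixels end up at 0.5. In place, the result becomes order dependent (0.5 and 0.75 here), which is the artifact the extra copy avoids.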

zhugel_007

Tuesday, March 15, 2011

Actually, because the depth buffer is not used, this method would not work for alpha-tested objects.
