Ubuntu rocks!

Thursday, March 19, 2009 | Permalink

It's been a while since I last tried Linux, for two reasons. First is the OpenGL debacle that has made this API much less interesting to me, and with all my demos written in DX10 there hasn't been much need to ever boot into Linux. The other reason is that for a long time AMD didn't provide any drivers for my particular GPU, a Radeon HD 3870x2. Oddly enough, the release notes for the Linux drivers still say that the HD 3870x2 is not supported, although clearly it worked for me now, so I suppose this is just a documentation error. How long it's been working I have no idea.

Anyway, so I wanted to try some stuff in Linux recently, primarily the SSE intrinsics. In the past I've used Gentoo, which is more of a power user distribution, but I couldn't be bothered to use that again since it's quite a lot of work to set it up, and besides, it's not being updated as much as it used to be these days. Ubuntu on the other hand seems to be the most trendy distribution now, so I decided to give it a try. I have to say I'm quite impressed.

It gave me a quite "Windows-like" experience. First I installed it on my laptop. It installed without problems and once I was in the OS it notified me about updates, which it downloaded and installed for me. Drivers for all my hardware were set up automatically and everything just worked out of the box. It even asked me whether it should install the proprietary drivers for my video card and set that up automatically for me as well. Once I got down to compiling stuff and needed libraries I was able to find and install everything I needed with a few searches in Adept.

In the past I would have said that the big problem for Linux is that it's an OS for geeks made by geeks and that for instance my mom who has big enough trouble with Windows would not be able to use it. And frankly, even if you're a geek, who wants to hack around in a bunch of config files anyway? I don't know how much of this is Ubuntu vs. Gentoo, or just the general progress of Linux in the last year or so, but with the experience I had I have to say that I would now be comfortable with recommending Linux to anyone. If you have never worked with either Windows or Linux before and you're starting entirely from scratch, I don't think it would be any harder to get started on Linux than on Windows.

Anyway, this was on my laptop. I installed it there primarily because I didn't think drivers for my HD 3870x2 existed, because that's what the driver release notes say anyway, whereas the Mobility HD 3650 should be fine. So given this positive experience I decided to give it a try on my desktop machine as well. Especially since I didn't want to have to copy files back and forth between my computers. Just for convenience I decided to use Wubi, which basically is an Ubuntu installer you run from Windows. It really can't be simpler than that. Download an exe, double-click and off you go. Once it's done you have a fully working complete Linux installation. Not a virtual machine like "Linux inside Windows" or anything like that, but a standard OS you boot into, except its file system is a large file on your Windows drive. And if you don't like it you can also uninstall it like any other application. With Wubi any form of inconvenience or danger of changing the partitions on your hard drive is eliminated. There's really no excuse for not giving Linux a try anymore. So I gave it a shot, and it worked fine, and to my surprise it even installed drivers for my GPU, which I thought didn't exist. And they worked fine too.

The Wubi installation seems to differ from the regular installation in a few ways though. I found I had to change a few settings here and there, like changing from single-click to double-click for opening files and so on. Also it seems the packages Adept knows about differ. The standard installation had all the dev packages I needed, whereas I had to resort to typing apt-get on the command line to install some packages in the Wubi installation. I suppose Adept has some sort of index of packages it knows about and the Wubi installation only includes those a normal user would use, or something like that. I haven't used Ubuntu or Adept before so I really don't know. Other than those minor annoyances, the Wubi installation worked really fine too.

Two thumbs up for the Linux community.

[ 4 comments | Last comment by Humus (2009-03-21 00:03:14) ]

DPPS (or why don't they ever get SSE right?)

Monday, March 16, 2009 | Permalink

So in my work on creating a new framework I've come to my vector class. So I decided to make use of SSE. I figure SSE3 is mainstream now so that's what I'm going to use as the baseline, with optional SSE4 support in case I ever need the extra performance, enabled with a USE_SSE4 #define.
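As a rough sketch of what such a compile-time switch could look like (this is not the framework's actual code): `Vec4` and `dot` are made-up names, and the `__SSE4_1__` / `__SSE3__` checks are the macros GCC and Clang predefine, so with another compiler those checks would have to be adapted. A plain scalar path is included so the snippet still builds when neither instruction set is enabled.

```cpp
#include <cassert>

#if defined(USE_SSE4) && defined(__SSE4_1__)
  #include <smmintrin.h>   // SSE4.1: _mm_dp_ps
#elif defined(__SSE3__)
  #include <pmmintrin.h>   // SSE3: _mm_hadd_ps
#endif

// Hypothetical minimal vector type, only for illustration.
struct Vec4 {
    float v[4];
    float operator[](int i) const { assert(i >= 0 && i < 4); return v[i]; }
};

inline float dot(const Vec4 &a, const Vec4 &b)
{
#if defined(USE_SSE4) && defined(__SSE4_1__)
    // Optional SSE4 path: one DPPS. 0xF1 = multiply all four lanes,
    // write the sum to lane 0.
    __m128 r = _mm_dp_ps(_mm_loadu_ps(a.v), _mm_loadu_ps(b.v), 0xF1);
    return _mm_cvtss_f32(r);
#elif defined(__SSE3__)
    // SSE3 baseline: multiply, then two horizontal adds.
    __m128 p = _mm_mul_ps(_mm_loadu_ps(a.v), _mm_loadu_ps(b.v));
    p = _mm_hadd_ps(p, p);
    p = _mm_hadd_ps(p, p);
    return _mm_cvtss_f32(p);
#else
    // Scalar fallback when no SSE3/SSE4.1 support is enabled at compile time.
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2] + a[3] * b[3];
#endif
}
```

With GCC or Clang the SSE4 path would be selected by building with something like `-DUSE_SSE4 -msse4.1`, and the SSE3 path with `-msse3`.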

Now, SSE is an instruction set that was large to begin with and has grown a lot with every new revision:

SSE: 70 instructions

SSE2: 144 instructions

SSE3: 13 instructions

SSSE3: 32 instructions

SSE4: 54 instructions

SSE4a: 4 instructions

SSE5: 170 instructions (not in any CPUs on the market yet)

Why all these instructions? Well, perhaps because they can't seem to get things right from the start. So new instructions are needed to overcome old limitations. There are loads of very specialized instructions while arguably very generic and useful instructions have long been missing. A dot product instruction should've been in the first SSE revision. Or at the very least a horizontal add. We got that in SSE3 finally. Yay! Only 6 years after 3DNow had that feature. As the name would make you believe, 3DNow was in its first revision very useful for anything related to 3D math, despite its limited instruction set of only 21 instructions (although to be fair it shared registers with MMX and thus didn't need to add new instructions for stuff that could already be done with MMX instructions).

So why this rant? Well, DPPS is an instruction that would at first make you think Intel finally got something really right about SSE. Maybe they have listened to a game developer for once. We finally have a dot product instruction. Yay! To their credit, it's more flexible than I ever expected such an instruction to be. But it disturbs me that instead of making it perfect they had to screw up one detail, which drastically reduces the usefulness of this instruction. The instruction comes with an immediate 8-bit operand, which is a 4-bit read mask and a 4-bit write mask. The read mask is done right. It selects what components to use in the dot product. So you can easily make a three or two component dot product, or even use XZ for instance for computing distance in the XZ plane. Now the write mask on the other hand is not really a write mask. Instead of simply selecting what components you want to write the result to, you select what components get the result and the rest are set to zero. Why oh why Intel? Why would I want to write zero to the remaining components? Couldn't you have let me preserve the values in the register instead? If I wanted them as zero I could have first cleared the register and then done the DPPS. Had the DPPS instruction had a real write mask we could've implemented a matrix-vector multiplication in four instructions. Now I have to write to different registers and then merge them with or-operations, which in addition to wasting precious registers also adds up to 7 instructions in total instead of 4, which ironically is the same number of instructions you needed in SSE3 to do the same thing. Aaargghh!!!!
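To make the mask behavior concrete, here is a small scalar model of the instruction in plain C++. It is only an emulation for illustration, not real SSE code: `Vec4`, `Mat4`, `dpps`, `dpps_preserving`, `orps`, `mul_dpps` and `mul_wished` are all made-up names, and `dpps_preserving` models the write mask I wish the instruction had, which does not exist in hardware. The upper four bits of the immediate select the inputs, the lower four select the lanes that receive the sum.

```cpp
#include <array>
#include <cassert>
#include <cstdint>
#include <cstring>

using Vec4 = std::array<float, 4>;  // stand-in for one 128-bit register
using Mat4 = std::array<Vec4, 4>;   // four rows

// Model of the real DPPS: unselected output lanes are forced to zero.
Vec4 dpps(const Vec4 &a, const Vec4 &b, int imm)
{
    assert(imm >= 0 && imm <= 0xFF);
    float sum = 0.0f;
    for (int i = 0; i < 4; i++)
        if (imm & (0x10 << i)) sum += a[i] * b[i];   // read mask, bits 4..7
    Vec4 r;
    for (int i = 0; i < 4; i++)
        r[i] = (imm & (1 << i)) ? sum : 0.0f;        // "write mask", bits 0..3
    return r;
}

// Hypothetical variant with a true write mask: unselected lanes keep dst.
Vec4 dpps_preserving(const Vec4 &dst, const Vec4 &a, const Vec4 &b, int imm)
{
    const Vec4 d = dpps(a, b, imm);
    Vec4 r = dst;
    for (int i = 0; i < 4; i++)
        if (imm & (1 << i)) r[i] = d[i];
    return r;
}

// Model of ORPS: bitwise OR of the raw float bits in each lane.
Vec4 orps(const Vec4 &a, const Vec4 &b)
{
    Vec4 r;
    for (int i = 0; i < 4; i++) {
        std::uint32_t x, y;
        std::memcpy(&x, &a[i], sizeof x);
        std::memcpy(&y, &b[i], sizeof y);
        x |= y;
        std::memcpy(&r[i], &x, sizeof x);
    }
    return r;
}

// With the real DPPS: 4 dot products into separate registers + 3 ORs = 7.
Vec4 mul_dpps(const Mat4 &m, const Vec4 &v)
{
    const Vec4 x = dpps(m[0], v, 0xF1);
    const Vec4 y = dpps(m[1], v, 0xF2);
    const Vec4 z = dpps(m[2], v, 0xF4);
    const Vec4 w = dpps(m[3], v, 0xF8);
    return orps(orps(x, y), orps(z, w));
}

// With a true write mask: 4 dot products into the same register.
Vec4 mul_wished(const Mat4 &m, const Vec4 &v)
{
    Vec4 r{};
    r = dpps_preserving(r, m[0], v, 0xF1);
    r = dpps_preserving(r, m[1], v, 0xF2);
    r = dpps_preserving(r, m[2], v, 0xF4);
    r = dpps_preserving(r, m[3], v, 0xF8);
    return r;
}
```

The OR merge in `mul_dpps` only works because the zeroed lanes are all-zero bit patterns, so each lane is non-zero in at most one of the four partial results.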

[ 9 comments | Last comment by JKL (2009-04-07 23:15:21) ]

OMG, Multi-Threading is Easier Than Networking

Sunday, March 15, 2009 | Permalink

A friend sent me a link to this Intel paper today:

OMG, Multi-Threading is Easier Than Networking

If you think the title is somewhat odd for a technical paper, wait until you read the actual paper. It contains cats. What more can I say?

Content-wise the paper is an excellent read for anyone who wants to get started with multi-threaded programming. I have to say that the lack of academic mumbo jumbo is really refreshing, which alone made the paper well worth the time to read, even though it didn't contain a lot of unique information. I wish more papers were written like this. Short and to the point. Lots of info that's instantly useful. Unlike a lot of the academic papers that seem to be written primarily with the intent of making the author seem good.

[ 1 comment | Last comment by Jackis (2009-03-16 12:05:30) ]

Whooha. Fire!

Wednesday, March 11, 2009 | Permalink

About 200m from here. Tantogården in flames. Or mostly smoke from where I could see it. Several buildings damaged according to reports.

Well, I guess that settles a thing or two. The politicians wanted to demolish it, despite protests. Now they don't have to. How convenient.

[ 2 comments | Last comment by Humus (2009-03-15 21:20:08) ]

New demo

Saturday, February 28, 2009 | Permalink

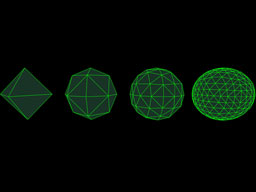

It's been a while, but I have finally added another demo to my site. Yay!

Those of you wishing for some pretty eyecandy will be disappointed though. This demo features green spheres.

But it's pretty cool though if you're into shader programming. It shows how to emulate a stack and recursive functions in a shader in DX10.

[ 11 comments | Last comment by Overlord (2009-03-06 00:33:11) ]

Yay!

Thursday, February 26, 2009 | Permalink

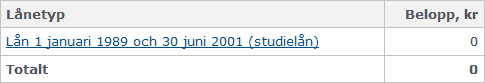

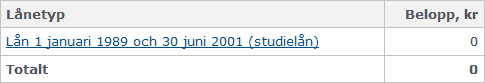

In the fall of 1998 I enrolled at university. From that time I've had a small study loan. Now more than 10 years later I've finally paid it back.

Feels great to finally finish this chapter of my life. No more debt for me! Oh wait, I have a mortgage ...

Funnily enough, for a long time I could've paid it back at any time. But study loans are subsidized in Sweden, so it never made much sense for me to pay back any more than they asked for. It was better for me to just shove my money into a savings account since that gave me a higher interest rate. And for several years after I finished my studies they didn't even ask me to pay anything. I suppose that's because while I lived in Canada I never had an income registered in Sweden. I think 2007 was the first year they required me to start making small payments. Anyway, I never paid more than I had to simply because it made no sense to do so. Now with the financial crisis the interest rates are dropping heavily. Meanwhile, the interest rate for study loans actually increased for 2009, for some obscure reason. So suddenly the interest rate for my mortgage is lower than the interest rate on the study loans, and that's not even counting the fact that my mortgage is tax deductible, unlike the study loan. So I had some money I had put aside for making a mortgage payment, but given this sudden change of things it made more sense to pay back the study loan instead. So I did.

[ 1 comment | Last comment by yosh64 (2009-02-28 15:59:05) ]

Another HDR camera

Thursday, February 19, 2009 | Permalink

Whoohaa, wouldn't have expected another company to get into the HDR field so quickly, but Ricoh just announced the CX1 which also sports this feature. They are taking a different approach though. Fujifilm went with a native HDR sensor, whereas Ricoh does the traditional combined exposures technique, except the camera does it automatically instead of relying on the user to do a lot of manual work at the computer to combine the images. It'll be interesting to see how well this turns out. It could potentially result in better image quality, but it could also be prone to mismatching exposures if you're shooting handheld, depending on the delay between the shots. It would also be nice if the camera did more than just two shots for greater dynamic range. With two vendors launching their HDR cameras so close to each other I suspect we'll see more coming in the near future. In a few years we may be in a position where doing exposure digitally as a postprocess becomes as natural as doing white balance digitally.

Speaking of white balance, another very interesting feature of the camera is "Multi-Pattern Auto White Balance". It's quite a common problem that different areas of a scene are lit by different light sources and require different white balance to look good, for instance when shooting indoors with a window visible. The indoor and outdoor parts of the image will require different white balance settings due to the difference in light temperature. But cameras apply one white balance setting to the whole scene. So either the outdoor part will look very blue or the indoor part very yellow, or neither will look correct. This camera is supposedly capable of doing white balance locally. How well this will work will be very interesting to see. From the description it sounds like it's applying white balance on a tile basis, so I suppose areas with pixels affected by both light sources may still look bad.

[ 0 comments ]

HDR cameras

Monday, February 16, 2009 | Permalink

HDR has been kind of a buzzword in photography for the last few years. Some of this may be related to the fact that HDR has also been a much talked about subject in GPU rendering over the same period. There are techniques for taking HDR photos with standard camera equipment using multiple exposures. We've also seen photographic packages such as Photoshop adding this functionality. Meanwhile there has from time to time been talk about new sensor technology for HDR photography, although little has seen the light of day.

Last September Fujifilm announced their new Super CCD EXR sensor promising improved dynamic range. Now that's something we've heard before, so I didn't pay much attention to it. Just recently they released the F200EXR camera based on this sensor. It appears this might just be the first HDR capable camera on the market. I don't think it'll produce actual HDR images, but it can capture an 800% expanded range, or a 0..8 range if you will, tonemapped to a nice looking image where other cameras would either have to underexpose or get blown out highlights. The camera accomplishes this through pixel binning where different sensor pixels capture different exposure ranges. As a result, you'll only get a 6MP image instead of 12MP when using this technique, a tradeoff I'm more than willing to make. 12MP is already far beyond what's meaningful to put into a camera anyway, particularly a compact.

Since the camera is new there aren't many reviews for it out there, but I've at least found this Czech site which has some samples. If those are representative of what this camera can do this may very well be my next compact camera.

A word of caution though. Looking in the EXIF tags of the pictures it seems they aren't all straight from the camera. Some have the camera name listed, others have "Adobe Lightroom", suggesting that they may have been processed in some way. The first sample pair lists the camera name though, so I'm going to assume at least those are unprocessed.

In any case, this is a very exciting development. I hope to see similar technology from other vendors as well, and I would love to see this stuff in an SLR.

[ 10 comments | Last comment by lone (2009-03-28 16:24:49) ]
