Updated the particle trimmer

Sunday, May 24, 2009 | Permalink

I've made the particle trimmer a bit more robust and a bit faster, and added a few extra features. It also handles cases such as a perfect circle better, where the convex hull ends up with a lot of edges. Before attempting to find the optimal polygon it'll reduce the hull size to at most a provided number of edges (default is 50), which should make it complete in a relatively short amount of time even for 8 vertices. In my tests, the reduction in efficiency from doing this is either zero or in the worst case a tiny fraction of a percentage point.

The tool can now also scale and bias the result to put the coordinates in the range you want, or perhaps just to flip the y coordinate.

I've also added a dilate filter option so that the texture can initially be dilated a specified number of times. This could be useful if there's a sharp edge rather than a smooth gradient towards the threshold value and the texture is filtered and/or mipmapped, in which case you might need a more conservative trimming to not get a visible cut.
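As an illustration of what such a dilate pass could look like, here is a minimal sketch. It assumes a single-channel 8-bit image and a 3x3 maximum filter; the function name `dilate` and the choice of a max filter are assumptions for illustration, not necessarily what the tool's source does.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// One dilation pass (assumed 3x3 max filter): every texel becomes the
// maximum of itself and its up to 8 neighbors. Texels outside the image
// are simply ignored.
std::vector<std::uint8_t> dilate(const std::vector<std::uint8_t>& src, int w, int h)
{
    assert(w > 0 && h > 0 && src.size() == std::size_t(w) * std::size_t(h));
    std::vector<std::uint8_t> dst(src.size());
    for (int y = 0; y < h; ++y)
        for (int x = 0; x < w; ++x) {
            std::uint8_t m = 0;
            for (int dy = -1; dy <= 1; ++dy)
                for (int dx = -1; dx <= 1; ++dx) {
                    int sx = x + dx, sy = y + dy;
                    if (sx >= 0 && sx < w && sy >= 0 && sy < h)
                        m = std::max(m, src[std::size_t(sy) * w + sx]);
                }
            dst[std::size_t(y) * w + x] = m;
        }
    return dst;
}
```

Calling this repeatedly on its own output dilates the image the requested number of times, growing the area above the threshold by one texel per pass.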

The tool should now also handle non-square textures better and the computations are now done in texel units rather than in normalized coordinates. Normalization is instead done on the final results, which are then scaled and biased with the provided values.
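The normalize-then-scale-and-bias step could be sketched roughly like this. The names `Vec2` and `normalize_scale_bias` are hypothetical and the tool's real source may be organized differently.

```cpp
#include <cassert>

// Hypothetical helper type for illustration.
struct Vec2 { float x, y; };

// Convert a polygon vertex from texel units to normalized [0, 1]
// coordinates, then apply a user-provided scale and bias per axis.
Vec2 normalize_scale_bias(Vec2 texel, int width, int height, Vec2 scale, Vec2 bias)
{
    assert(width > 0 && height > 0);
    // Each axis is divided by its own dimension, so non-square textures work.
    Vec2 n = { texel.x / float(width), texel.y / float(height) };
    return { n.x * scale.x + bias.x, n.y * scale.y + bias.y };
}
```

With a scale of (1, -1) and a bias of (0, 1) this flips the y coordinate, while a scale of (2, 2) and a bias of (-1, -1) maps the result to the [-1, 1] range.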

Update 2009-05-27:

I've updated the tool again because the last release was kind of broken with atlases. It should now work properly.

[ 5 comments | Last comment by Remage (2009-07-07 17:13:03) ]

New particle trimming tool

Thursday, May 21, 2009 | Permalink

GPUs continue to get more powerful at an amazing rate. The performance boost is not evenly distributed though. While ALU power is shooting through the roof some other parts of the chip are lagging behind. Most notably lagging are the ROPs. A Radeon HD 4890 has 16 ROPs, which is the same as even an old X800XT. This means that the only performance boost is from higher clocks. So while the HD 4890 has roughly 15x the raw ALU power (if my math is right) it only has 70% higher fillrate than the X800XT. This of course makes sense since we're no longer in a "more pixels" race, but it's all about "better pixels" these days. The monitor resolutions aren't going up very fast, in fact, we had a drop with the transition from CRT to LCD and I suppose we're now roughly back to where we were before.
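As a rough sanity check of the 70% figure: peak pixel fillrate is the number of ROPs times the core clock. The clocks used here are an assumption (reference core clocks of about 850 MHz for the HD 4890 and 500 MHz for the X800XT), not numbers stated in the post:

$$
\frac{16 \times 850\ \text{MHz}}{16 \times 500\ \text{MHz}} = \frac{13.6\ \text{Gpixels/s}}{8.0\ \text{Gpixels/s}} = 1.7
$$

With the ROP count unchanged, the ratio reduces to the ratio of the clocks.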

The relatively low ROP performance can be a problem though when dealing with things like particle systems, which can fill large portions of the screen with a lot of overdraw and often only use a trivial shader. To deal with this people have come up with ideas such as rendering to a smaller buffer and then merging the result with the main buffer, but there are also simpler solutions like simply using fewer and denser particles.

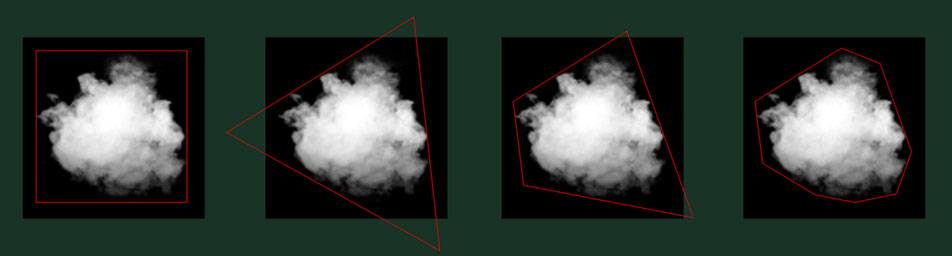

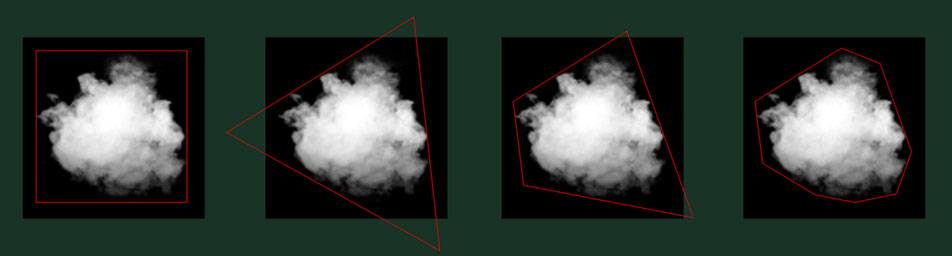

Particles are often rendered as simple quads. This is particularly wasteful of our limited ROPs. It may not seem all that bad, but a relatively normal particle texture can contain a surprisingly large amount of empty space. Take for instance this smoke puff I found in the darkest corners of my texture collection. It may look like less, but half of it is actually empty space, which means that we're rendering twice as many pixels as we have to.

So the first approach at dealing with this situation is to shrink the quad to the bounding box of the particle. It may look like a small gain, but that's actually a saving of over 30%. However, most particles aren't shaped like aligned rectangles, so we can optimize this further. So this afternoon I wrote a tool that takes a particle image as input and generates an optimized polygon enclosing the particle. It can be downloaded from the Cool stuff section. How much difference does it make to use an optimized polygon over the naive approach? Quite a lot actually.

In fact, the triangle case above is nearly as efficient as the naive rectangle, whereas the optimized quad shaves off another 10 percentage points. There's of course a diminishing return from using more vertices, but depending on the shape of the particle, it could be worth going higher. This is the result from this particular smoke puff:

Original: 100%

Aligned rect: 69.23%

Optimized 3 verts: 70.66%

Optimized 4 verts: 60.16%

Optimized 5 verts: 55.60%

Optimized 6 verts: 53.94%

Optimized 7 verts: 52.31%

Optimized 8 verts: 51.90%
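For reference, a number like the "Aligned rect" one can be computed along these lines. This is only a sketch: it assumes a single-channel 8-bit image, treats a texel as part of the particle when its value is strictly above the threshold, and the function name `aligned_rect_coverage` is made up for the example.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Fraction of the full texture covered by the axis-aligned bounding
// rectangle of all texels above the threshold. Returns 0 if no texel is.
double aligned_rect_coverage(const std::vector<std::uint8_t>& alpha,
                             int w, int h, std::uint8_t threshold)
{
    assert(w > 0 && h > 0 && alpha.size() == std::size_t(w) * std::size_t(h));
    int x0 = w, y0 = h, x1 = -1, y1 = -1;
    for (int y = 0; y < h; ++y)
        for (int x = 0; x < w; ++x)
            if (alpha[std::size_t(y) * w + x] > threshold) {
                x0 = std::min(x0, x); x1 = std::max(x1, x);
                y0 = std::min(y0, y); y1 = std::max(y1, y);
            }
    if (x1 < 0) return 0.0;  // nothing above the threshold
    double rect_area = double(x1 - x0 + 1) * double(y1 - y0 + 1);
    return rect_area / (double(w) * double(h));
}
```

The percentages for the optimized polygons are the same kind of ratio, with the polygon area in place of the rectangle area.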

So how is the tool doing its magic? First the source image is thresholded so that you have a bitmap of zeros and ones. Then it loops through the pixels again and adds all points that are ones into a convex hull that's continuously updated as new points are added. In order to not throw tens of thousands of points at it I'm doing a basic check with neighboring pixels so I'm only adding potential corner pixels. If the number of neighbors that are set is 5 or more it's either in the middle of a straight edge or at a corner that's pointing inwards and thus is not added. This reduces it to hundreds of points to consider. After this pass we have a convex hull enclosing the particle. For this smoke puff it had 23 edges in the end, so it appears to not get overly complex in normal cases. Finally it goes over all permutations of the edges given a provided target vertex count, checks for each permutation that a valid fully enclosing polygon can be constructed with those edges, and for all valid ones finds the one that has the smallest area. As always, the source code is included.
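The first two stages described above can be sketched as follows. This is not the tool's actual source: it assumes the image has already been thresholded into a mask of zeros and non-zeros, it uses texel coordinates directly as points, and it builds the hull in one go with Andrew's monotone chain rather than updating it continuously as points are added. All names are hypothetical. The final search for the smallest enclosing polygon is left out.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Pt { int x, y; };

// Keep only set texels with fewer than 5 of their 8 neighbors set, i.e.
// drop interior texels, straight edges and inward-pointing corners.
std::vector<Pt> corner_candidates(const std::vector<std::uint8_t>& mask, int w, int h)
{
    assert(w > 0 && h > 0 && mask.size() == std::size_t(w) * std::size_t(h));
    auto is_set = [&](int x, int y) {
        return x >= 0 && x < w && y >= 0 && y < h && mask[std::size_t(y) * w + x] != 0;
    };
    std::vector<Pt> out;
    for (int y = 0; y < h; ++y)
        for (int x = 0; x < w; ++x) {
            if (!is_set(x, y)) continue;
            int n = 0;
            for (int dy = -1; dy <= 1; ++dy)
                for (int dx = -1; dx <= 1; ++dx)
                    if ((dx != 0 || dy != 0) && is_set(x + dx, y + dy)) ++n;
            if (n < 5) out.push_back({x, y});
        }
    return out;
}

// Twice the signed area of triangle (o, a, b); positive for a left turn.
static long long cross(Pt o, Pt a, Pt b)
{
    return (long long)(a.x - o.x) * (b.y - o.y) - (long long)(a.y - o.y) * (b.x - o.x);
}

// Convex hull via Andrew's monotone chain, counter-clockwise order,
// collinear points removed.
std::vector<Pt> convex_hull(std::vector<Pt> p)
{
    std::sort(p.begin(), p.end(),
              [](Pt a, Pt b) { return a.x < b.x || (a.x == b.x && a.y < b.y); });
    if (p.size() < 3) return p;
    std::vector<Pt> hull(2 * p.size());
    std::size_t k = 0;
    for (std::size_t i = 0; i < p.size(); ++i) {               // lower hull
        while (k >= 2 && cross(hull[k - 2], hull[k - 1], p[i]) <= 0) --k;
        hull[k++] = p[i];
    }
    for (std::size_t i = p.size() - 1, t = k + 1; i > 0; --i) { // upper hull
        while (k >= t && cross(hull[k - 2], hull[k - 1], p[i - 1]) <= 0) --k;
        hull[k++] = p[i - 1];
    }
    hull.resize(k - 1);  // the last point repeats the first
    return hull;
}
```

For a solid 4x4 square of set texels, only the four corner texels survive the neighbor test (3 neighbors each, while edge texels have 5 and interior texels 8), and the hull is those four points.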

I haven't tested this tool extensively yet, so it won't surprise me if there are bugs or if it's not 100% robust, but it seems to work very nicely and provides the resulting polygon as good as instantaneously with the textures I have tested. I also haven't proven that the result is actually the optimal solution, but if it's not, it is probably close to optimum in real world scenarios.

If anyone finds this tool useful and plans to use it, or has any feedback, just let me know.

[ 10 comments | Last comment by Humus (2009-06-02 21:43:30) ]

Some thoughts on the compute shader and the future

Saturday, May 16, 2009 | Permalink

GPGPU has been a buzz-word for a while now. The first serious attempts in this area came around 2005/2006 with shader model 3.0 cards, and while the potential has always been great, up until now it's basically been a field for a small number of researchers with very specialized applications. One of the problems has been that to use the GPU for general computing you had to go through graphics APIs like DirectX and OpenGL. These were designed for graphics and not for general computations. To really use a GPU to its full potential you often needed more low-level access to the hardware. This resulted in vendor specific APIs like CTM, Stream SDK, CUDA etc. From the original stumbling attempts the technology in this field has moved at an amazing pace, and while GPGPU is still not exactly mainstream it's just a matter of time now before it is. The main driving forces behind this are the compute shader in DX11 and OpenCL. With vendor agnostic APIs and straightforward interaction with game graphics, we are likely to see more generic use of the GPU in future games. Most games will probably still stick to DirectX, so the DX11 compute shader will probably be the most relevant API for game developers, whereas I think researchers and general application developers will probably prefer OpenCL.

One interesting thing about the compute shader is that while the programming approach is radically different from traditional vertex and pixel shader programming, the underlying hardware hasn't radically changed to accommodate this change in API. It's mostly about using existing hardware capabilities in a smarter way. Yes, there are features being added and hardware of course continues to get more flexible, but the radical change is in how we program the GPU, rather than in how the GPU itself works. This is illustrated by the fact that DX11 adds a compute shader 4.0/4.1 for existing DX10 and DX10.1 hardware.

So what's separating a GPU from a CPU? How come a GPU generally is able to reach its full potential and extract close to theoretical max performance whereas a CPU rarely reaches anywhere close to its theoretical performance? The difference is in how it approaches long latency operations, in particular memory accesses. When a CPU needs a value from memory it will stall waiting for the value to arrive and then continue execution when it's available. In order to avoid stalls happening all the time CPUs have large caches so that for frequently accessed data the huge stalls can be avoided. In fact, these days CPUs contain more cache than computing logic. GPUs on the other hand are mostly logic and little cache. While there are some small caches to improve performance of localized accesses, the main approach is to hide latency of memory accesses through threading. Basically when a GPU hits a memory access, such as a texture fetch, it won't stall waiting for it to arrive, instead it'll just switch to another thread and continue working on that one. And when that thread hits a memory access, it'll switch to the next. At some point it has launched as many threads as it can run simultaneously, and will return to the first thread again. At that point chances are the memory request has finished and the results are readily available in the destination register. Because of this approach GPUs, unlike CPUs, need a very large register set to hold the active values of all threads in flight. The more registers (GPRs) a shader uses, the fewer threads a GPU can fit into the register set. Thus the number of GPRs can have a significant impact on performance, since fewer threads means a greater chance that by the time you return to a thread that issued a memory request, the request is not yet complete.
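To put some numbers on the relationship between GPR usage and latency hiding, here is a deliberately simplified model. The function names and all figures in the usage note are made up for illustration and do not describe any particular GPU.

```cpp
#include <cassert>

// How many threads fit in the register set if every thread needs
// gprs_per_thread registers (simplified: no other limits considered).
int threads_in_flight(int register_set_size, int gprs_per_thread)
{
    assert(register_set_size > 0 && gprs_per_thread > 0);
    return register_set_size / gprs_per_thread;
}

// Simplified latency model: while one thread waits for memory, each of
// the other threads can run alu_cycles_per_fetch cycles of work before
// hitting its own fetch. The latency is hidden if that work covers it.
bool latency_hidden(int threads, int alu_cycles_per_fetch, int memory_latency_cycles)
{
    assert(threads > 0 && alu_cycles_per_fetch >= 0 && memory_latency_cycles >= 0);
    return (threads - 1) * alu_cycles_per_fetch >= memory_latency_cycles;
}
```

With a hypothetical register set of 256 entries, a shader using 8 GPRs gets 32 threads in flight, which covers a 200 cycle latency at 10 ALU cycles per fetch. The same shader at 32 GPRs only gets 8 threads, and the GPU ends up waiting.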

A couple of side notes:

While I don't think it was ever publicly documented what size the register set of the NV30 was, it's probably the case that the notably poor performance of this chip was mainly down to a small register set, which would also be why the Cg compiler at the time generally quite heavily traded ALUs for fewer GPRs in shaders.

There are also some GPU-like approaches in the CPU world. For instance Intel's HyperThreading also hides latencies through threading, hence the name of the technology. Instead of idling while a memory request finishes it'll switch to another thread. So a single core can appear as two logical cores and run two different threads and switch between the two as it's waiting for memory.

The huge register set of GPUs also has an equivalent in the SPUs in Cell. They do not have the automatic threading of GPUs, but you could manually emulate that behavior.

So what is a thread in the context of GPUs? It's basically an instance running the shader, for example a pixel in a pixel shader, or a vertex in the vertex shader. While GPUs always did threading this has been implicit and hidden from the developer. It's not very relevant in the programming model of vertex and pixel shaders. Each thread was completely independent of all other threads anyway. What the compute shader does is make threading a little bit more explicit. In the shader you tell the dispatcher how many threads you want in a threadgroup and more importantly you can share data between threads in a threadgroup. So while each thread gets its own registers as usual there are also some registers that are shared between threads in a threadgroup. Given that they are shared it does not increase the register pressure by much. The biggest chunk will still generally be the local registers for each individual thread. And in practice you'll probably see the number of local registers needed go down since your previously duplicated registers can now be shared with some care from the developer. As a result, the register pressure would go down, potentially increasing performance.

So with this long introduction, what can we expect of future hardware and software? For developers the initial challenge will be to wrap their heads around the compute shader model and understand how it maps to the underlying hardware. The vertex and pixel shader model is easy and intuitive. The compute shader not so much. The good news is that developers that know how to write a good compute shader likely also have a better understanding of the hardware and will probably also write better vertex and pixel shaders.

Once you're getting into the idea of explicit threading on the GPU you will probably realize that threading on the GPU, like threading on the CPU, in addition to being able to extract additional performance also opens up for a lot of shooting yourself in the foot. The joy of race conditions is coming to the GPU. Hooray! So whereas any working pixel shader written today can be expected to work on any future hardware, if you mess up with the compute shader it could break on future hardware. For instance if you forgot a sync point, or are accidentally writing results to the same memory address from two different threads, the timing on current hardware could be such that it works by accident anyway, whereas newer hardware gets slightly different timing and the end result in shared registers or memory becomes different. The good news is that if you're doing graphics you'll probably notice most race conditions much more easily than in CPU code, because for instance some strange flickering might occur randomly. But chances are that at some point a game will ship with an undetectedly broken compute shader that breaks on future hardware. I'm going to guess that by say 2012 we'll see some games from 2010/2011 break on the latest and greatest GPU.

Another important aspect of this added flexibility of the hardware is how it will change the work balance of the GPU. Over the years the ALU:TEX ratio has slowly increased. Shaders get more and more compute bound and hardware is ramping up ALU power faster than texturing power. One factor that has slowed down this process is that there still exist some important parts of many games that are heavily TEX bound. This is particularly true for post-effects. The good news is that the compute shader comes in very handy for most post-effects and by sharing data between threads you can significantly cut down on the texture fetches needed. For instance, instead of running a 3x3 blur filter in the pixel shader and requiring 9 texture fetches per pixel, you could launch a threadgroup of 10x10 where each thread takes one texture sample and writes it to the shared registers, and then 8x8 results are computed and written to memory. This will take the number of texture fetches per pixel down from 9 to an average of 100/64 = 1.5625, or a 5.76x reduction. Given that the compute shader will likely replace most pixel shader based post-effects there remain very few reasons to be conservative about ALU:TEX ratio, so most likely in the next couple of generations we'll see the ratio increase faster than it has done so far.
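The fetch count in the 10x10 threadgroup example generalizes to other group sizes and filter widths. A small sketch, with a made-up function name, assuming a square threadgroup in which every thread fetches exactly one texel and only the interior threads produce an output:

```cpp
#include <cassert>

// Average texture fetches per output pixel when a group_dim x group_dim
// threadgroup loads one texel per thread into shared storage and then
// computes a filter of the given radius (1 for 3x3, 2 for 5x5, ...)
// for the interior texels only.
double fetches_per_output(int group_dim, int filter_radius)
{
    int out_dim = group_dim - 2 * filter_radius;  // outputs per row and column
    assert(group_dim > 0 && filter_radius >= 0 && out_dim > 0);
    return (double(group_dim) * group_dim) / (double(out_dim) * out_dim);
}
```

For the example above, `fetches_per_output(10, 1)` gives 100/64 = 1.5625. Larger groups amortize the border better, at the cost of more shared storage per group.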

Finally, with the compute shader it's going to be an increasingly attractive option to do more than just graphics on the GPU. Nvidia already has PhysX physics on the GPU, and most likely we'll see cross-vendor physics middleware in the future. Some developers will probably do their own physics, especially when it comes to effect physics, like cloth, water/fire/smoke animation and particle systems. Some might put parts of AI and game logic on the GPU as well. Audio is another good candidate for GPU processing. With things like this processed by the GPU, chances are you will also want to keep it there and make some systems self-contained on the GPU and only communicate some stuff back to the CPU as necessary. When this becomes mainstream, it's probably the nail in the coffin for current multi-GPU solutions. While CrossFire/SLI solutions will probably work fine for at least the next generation and perhaps another one, in the long term it'll be hard to continue with AFR rendering. Self-contained systems will simply create so many nasty inter-frame dependencies and data copies between GPUs that it'll be hard to maintain reasonable performance scaling. This doesn't necessarily mean multi-GPU will die, but my prediction is that it'll have to change such that the two GPUs work together on the same frame, use a shared memory and for all purposes behave as if they were a single GPU. That probably means that two separate video cards in the same system running in CrossFire/SLI will die off, whereas solutions using two GPUs on the same board will continue to exist.

I'll throw in a final prediction as well. I think the GPU and CPU will eventually merge onto the same die. Not so that one will replace the other, or they'll become so similar that they are essentially the same, no, I think that we will still have a CPU and GPU very much like today, even on a single chip. But I think the multi-core trend on CPUs will take a different turn. Instead of increasing number of standard complex cores, you'll probably see just a few standard cores optimized for highest performance of sequential code. They'll execute all the hard to parallelize code. Then you'll have a large array of simple CPU cores, Larrabee style, which are individually not as fast but you can instead have many of them, which will take on CPU tasks that are mostly parallel. Including both simple and complex cores, rather than doing one or the other, is motivated by Amdahl's law. Everything can't be parallelized, so let's keep at least a few complex cores that can take on those tasks instead of constraining the whole system's scaling to the performance of the simple cores. Then finally you'll have the GPU where you do specialized super-parallel work, in particular (of course) graphics.
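The Amdahl's law argument for mixing core types can be made concrete with a small sketch. The function name and the numbers in the usage note are hypothetical; the model only distinguishes a serial part that runs on one core and a perfectly parallel part spread over the simple cores.

```cpp
#include <cassert>

// Run time relative to executing everything on a single simple core.
// The serial part runs on one core that is serial_core_speed times as
// fast as a simple core; the parallel part is split evenly across
// simple_cores simple cores.
double relative_time(double parallel_fraction, int simple_cores, double serial_core_speed)
{
    assert(parallel_fraction >= 0.0 && parallel_fraction <= 1.0);
    assert(simple_cores > 0 && serial_core_speed > 0.0);
    return (1.0 - parallel_fraction) / serial_core_speed
         + parallel_fraction / double(simple_cores);
}
```

With 90% parallel code and 64 simple cores, running the serial 10% on a simple core gives a relative time of about 0.114, dominated by the serial part. Running that part on a complex core that is twice as fast brings it down to about 0.064, which no number of additional simple cores could achieve.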

[ 6 comments | Last comment by ULJarad (2009-06-28 17:47:31) ]

Daily dose of geekiness

Thursday, May 14, 2009 | Permalink

Playing music on a CNC

[ 1 comment | Last comment by NitroGL (2009-05-15 05:32:50) ]

1.06 GigaEuros

Wednesday, May 13, 2009 | Permalink

EU fines Intel €1.06 billion in the EU antitrust case. Justice at last I guess you could say. Not a day too soon and hardly surprising given the shenanigans that have been going on for so long. Intel will of course appeal, but I think their chances of getting a much more favorable verdict are slim given the facts on the table. A more interesting question is whether it will actually change Intel's behavior. I'm doubtful. Although the fine is the largest ever, it's probably only a fraction of the profits Intel made over the years from their illegal activities.

Also worth reading:

Will fine force Intel to change its ways?

[ 1 comment | Last comment by mark (2009-05-31 21:46:56) ]

Duke Nukem Forever

Thursday, May 7, 2009 | Permalink

Duke Nukem Whenever

Duke Nukem Taking Forever

Duke Nukem If Ever

Duke Nukem Never, and that's official.

[ 3 comments | Last comment by Micke (2009-05-20 01:07:34) ]

The most horrible interface ever

Wednesday, May 6, 2009 | Permalink

Here's one vote for Win32 API handling of cursors. Trying to squeeze a custom cursor through Windows' tight intestines and not getting screwed in the process in one way or another is easier said than done.

So you have a custom cursor and you called SetCursor() to use it. What if you want to hide it?

ShowCursor(FALSE)?

*BZZZZTT* Wrong answer!

SetCursor(NULL)?

*BZZZZTT* Wrong answer!

Correct answer:

PostMessage(hwnd, WM_SETCURSOR, (WPARAM) hwnd, HTCLIENT);

and then

case WM_SETCURSOR:

SetCursor(NULL);

return TRUE;

No, just calling SetCursor(NULL) directly doesn't work. You really need to do it in response to WM_SETCURSOR. Or at least it doesn't take effect until you move the mouse or click. Or you just wait a few seconds until Windows randomly posts a WM_SETCURSOR message to your window for absolutely no reason. What the heck, does that happen to cover up that things aren't really working under the hood?

[ 7 comments | Last comment by Humus (2009-05-11 22:21:33) ]

Shader programming tips #5

Friday, May 1, 2009 | Permalink

In the comments to Shader programming tips #4 Java Cool Dude mentioned that for fullscreen passes you can pass an interpolated direction vector and use the linearized depth to compute the world position. Basically with view_z computed using the math in #4 you compute:

float3 world_pos = cam_pos + In.dir * view_z;

This amounts to only two scalar operations and one float3 to carry out.

What about regular non-fullscreen passes? Turns out you can do that as well using a nice DX10 feature. The problem is that we need to interpolate the direction vector in screen space, rather than doing perspective correction. For screen aligned primitives it's the same thing, so it works out in this case, but for "normal" mesh data it's a completely different story. In DX10, and also in GLSL, there's now a noperspective keyword you can add to your interpolator, which changes the interpolation mode to eliminate the perspective correction, thus giving you an interpolation that's linear in screen space instead.

How do we compute the direction vector? Just take the position you're writing out from the vertex shader and push it to the far plane. Depending on whether you're using a reversed projection matrix or not you want either Z=0 or Z=1, which can be done by using float4(Out.Position.xy, 0, Out.Position.w) or Out.Position.xyww respectively in homogeneous coordinates. Transform this vector with the inverse view_proj matrix to get the world-space position of the point's far plane equivalent. Now subtract cam_pos from this and that's the direction vector. Instead of subtracting the cam_pos in the vertex shader you can just bake that into the same matrix and get it for free. The resulting vertex shader snippet for this is something like this:

float4 dir = mul(view_proj_inv, Out.Position.xyww);

Out.dir = dir.xyz / dir.w;

Finally, note that view_z as computed in #4 goes from 0 at the camera to far_plane at the far clipping plane. For this computation we need it to be 1.0 at the far clipping plane, which can be done by simply multiplying ZParams with far_plane. Alternatively you can divide Out.dir with far_plane in the vertex shader.
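To see why the far plane direction and a normalized view_z reproduce the world position, here is a small CPU-side check of the math. It is only a sketch: it assumes an unrotated camera looking down +Z so that view-space depth is simply the Z distance from the camera, and the names `Vec3`, `far_plane_dir` and `reconstruct` are made up for the example.

```cpp
#include <cassert>

struct Vec3 { float x, y, z; };

// Direction from the camera to the far plane point that lies on the
// same view ray as the point p (assumes an unrotated camera looking
// down +Z, so depth is p.z - cam_pos.z).
Vec3 far_plane_dir(Vec3 cam_pos, Vec3 p, float far_plane)
{
    float depth = p.z - cam_pos.z;
    assert(depth > 0.0f && far_plane > 0.0f);
    float s = far_plane / depth;  // stretch the ray until it hits the far plane
    return { (p.x - cam_pos.x) * s, (p.y - cam_pos.y) * s, (p.z - cam_pos.z) * s };
}

// Same math as the pixel shader line: cam_pos + dir * view_z, with
// view_z normalized to be 1.0 at the far plane.
Vec3 reconstruct(Vec3 cam_pos, Vec3 dir, float view_z)
{
    return { cam_pos.x + dir.x * view_z,
             cam_pos.y + dir.y * view_z,
             cam_pos.z + dir.z * view_z };
}
```

With the camera at (10, 0, 0), a far plane at 10 and a point at (11, 2, 5), the direction becomes (2, 4, 10) and the normalized view_z is 0.5, so the reconstruction returns (11, 2, 5) again.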

[ 0 comments ]
